Sitecore Publishing Service (SPS) is a replacement for the built-in publish function. It’s built on .NET Core and runs as a separate microservice, unlike the built-in publisher, which runs in-process.

I’ve been using it, or rather trying to use it, for about a year now on a large Sitecore 9.0.1 solution. It was anything but a smooth ride, so I thought it would be worth sharing my experience and what I learned along the way.

SPS has clear advantages when it comes to publishing speed. It’s not as “lightning fast” as Sitecore claims, but it’s still a lot faster than the built-in publisher. The greatest advantage, in my opinion, is that it runs outside the Sitecore Content Management (CM) worker process, so an ongoing publish process doesn’t break due to an IIS application pool recycle. Those two reasons were also why we tried moving to SPS.

Note: This post describes my experiences working with SPS 3.0 to 3.1.3. Some of the issues have been fixed in later versions. Some issues may also remain in SPS 4, as it was released before 3.1.3. Many of the issues turned out to exist in 2.x as well.

How Sitecore Publishing Service works

SPS runs as a separate service and moves data from the master database to the target web databases. When a publish operation starts, SPS builds a manifest describing what needs to be published and it then moves the data as bulk operations to the targets. This also reduces the effect of long latency between authoring system and delivery systems.
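To make the manifest idea more concrete, here is a minimal Python sketch. It is not how SPS is implemented: it assumes the __Revision value alone decides whether a language version has changed, it ignores deletions, workflow and publishing restrictions, and the ItemVersion, build_manifest and batches names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ItemVersion:
    item_id: str
    language: str
    revision: str  # value of the __Revision field


def build_manifest(master_versions, target_versions):
    """Return the publishable master versions that are missing in, or differ from, the target."""
    target_revisions = {(v.item_id, v.language): v.revision for v in target_versions}
    return [
        v for v in master_versions
        if target_revisions.get((v.item_id, v.language)) != v.revision
    ]


def batches(manifest, size):
    """Split the manifest into chunks, each meant to be written to the target as one bulk operation."""
    return [manifest[i:i + size] for i in range(0, len(manifest), size)]
```

Writing a few large batches instead of making one round trip per item is what makes this approach tolerant of latency between the authoring and delivery systems.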

SPS should be placed close to the master database, but doesn’t have to be installed on the database server itself, nor does it have to run on a separate machine. Having SPS as a separate web site on the CM server usually works just fine, since the CM server is typically in the same data center as the master database and the memory footprint of SPS is quite small.

When content is edited, the CM server sends signals to SPS about what changes have been made, so that SPS can calculate a manifest of what needs publishing. This essentially replaces the built-in PublishQueue table: SPS instead uses a set of 11 tables, prefixed with Publishing_, in the master database.

Tip: Watch the size of your Publishing_ManifestStep table. It can become really large, with millions of rows, and consume gigabytes of table space. Consider reducing the JobAge of the PublishJobCleanupTask.

After publishing, SPS signals back to the CM/CD servers what items have been written, so that they can clear affected caches and update search indexes etc.

Installing and upgrading tips

Installing SPS is very straightforward. It’s well documented, so I won’t repeat that here. A great, quick and solid guide is Stephen Pope’s Quick Start Guide.

As SPS is mostly suitable for large solutions, one can expect certain operations to take some time. However, I’ve found the default timeout settings in SPS to be too short. To rule out weird errors, start by increasing the configured timeouts in the SPS config file /config/sitecore/publishing/sc.publishing.xml. This file contains a set of DefaultCommandTimeout and CommandTimeout elements. Increase the short 10-second timeouts to something like 60 seconds, and the longer 120-second timeouts to something much larger, such as 7200 seconds (2 hours). A lot of intermittent errors went away when I increased those timeouts.
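If you have many environments, the timeout change can be scripted. This is a minimal sketch, assuming the timeout elements hold plain integer seconds (for example <CommandTimeout>120</CommandTimeout>) and are not in an XML namespace; values in any other format are left untouched. The function names are hypothetical, so keep a backup of the file before running it.

```python
import xml.etree.ElementTree as ET

# Assumption: timeout elements contain plain integer seconds.
TIMEOUT_TAGS = {"DefaultCommandTimeout", "CommandTimeout"}
# Current value (seconds) -> new value (seconds), following the advice above.
TIMEOUT_BUMPS = {10: 60, 120: 7200}


def bump_timeouts(root, bumps=TIMEOUT_BUMPS):
    """Raise known-short timeouts in place; return how many elements were changed."""
    changed = 0
    for elem in root.iter():
        text = (elem.text or "").strip()
        if elem.tag in TIMEOUT_TAGS and text.isdigit() and int(text) in bumps:
            elem.text = str(bumps[int(text)])
            changed += 1
    return changed


def bump_timeouts_in_file(path):
    # insert_comments=True (Python 3.8+) keeps XML comments when the file is written back.
    parser = ET.XMLParser(target=ET.TreeBuilder(insert_comments=True))
    tree = ET.parse(path, parser=parser)
    changed = bump_timeouts(tree.getroot())
    tree.write(path, encoding="utf-8", xml_declaration=True)
    return changed
```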

By default, SPS writes all the publishing operations in one transaction. This is pretty good from an architectural point of view, since a publish operation can be rolled back in case of an error. However, pushing thousands of items in one large transaction is quite heavy on the target database. In practice, I haven’t managed to get transactions to work well, as commit sets easily become too large to handle. I’ve found it better to just disable transactions. This can be done by setting the TransactionalPromote option to false in sc.publishing.service.xml. Add the <Options> section if it doesn’t already exist, or update the existing options as shown below:

```xml
<IncrementalPublishHandler>
  <Type>Sitecore.Framework.Publishing.PublishJobQueue.Handlers.IncrementalPublishHandler, Sitecore.Framework.Publishing</Type>
  <As>Sitecore.Framework.Publishing.PublishJobQueue.IPublishJobHandler, Sitecore.Framework.Publishing.Service.Abstractions</As>
  <Options>
    <TransactionalPromote>false</TransactionalPromote>
  </Options>
</IncrementalPublishHandler>
<TreePublishHandler>
  <Type>Sitecore.Framework.Publishing.PublishJobQueue.Handlers.TreePublishHandler, Sitecore.Framework.Publishing</Type>
  <As>Sitecore.Framework.Publishing.PublishJobQueue.IPublishJobHandler, Sitecore.Framework.Publishing.Service.Abstractions</As>
  <Options>
    <TransactionalPromote>false</TransactionalPromote>
  </Options>
</TreePublishHandler>
```

In production environments, I’ve struggled with properly encrypting the connection strings to avoid having database credentials in clear text. You can’t use the regular aspnet_regiis.exe -pef "connectionStrings" . command for the SPS site. I learned that Microsoft has changed a lot about how configuration works in .NET Core. See this post on Sitecore Stack Exchange for more details.

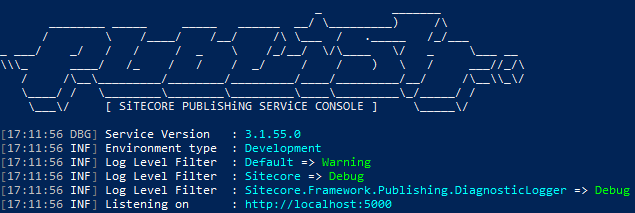

Tip: Running SPS in development mode makes debugging a lot easier. To easily switch between IIS-hosted SPS and development mode, have IIS listen on localhost:5000, as it’s the default endpoint for development mode. You can then just stop the IIS SPS application and start SPS from a console, without changing the Sitecore CM config files, and thereby avoid a heavy IIS recycle.

When it comes to upgrades, I’ve found it easier to just replace the DLL files and any changed config files, and install the Sitecore module when applicable. This has worked fine for 2.x and 3.x upgrades. Always make sure the database schema is up to date by running the .\Sitecore.Framework.Publishing.Host.exe schema upgrade command.

When upgrading to SPS 3.1.3, Sitecore may stop working due to a DLL conflict, showing a yellow error screen claiming it can’t load System.Net.Http. Sitecore has released a knowledge base article about this: https://kb.sitecore.net/en/Articles/2019/05/29/13/55/084098.aspx

Once SPS is installed, it might be useful to sometimes use the built-in publisher for testing and debugging purposes. It’s relatively easy to switch between the two publishing engines. To disable SPS, perform the following operations:

- Disable the SPS config files: In Sitecore 9+, add mode="off" to the SPS module in Layers.config. In 8.2, you disable the config files the old-fashioned way.

- Rename the core:/sitecore/system/Aliases/Applications/Publish item to something different, such as PublishDisabled.

Reverse the operation to re-enable SPS.
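The Layers.config part of the switch can be scripted. This is a minimal sketch, assuming the SPS folder is already listed as an <add path="..." type="Folder" /> entry under the layer’s <loadOrder>; the "Modules" layer name and "PublishingService" path below are placeholders for whatever your installation uses, and set_layer_entry_mode is a hypothetical helper. Renaming the Publish alias item is still done in the core database.

```python
import xml.etree.ElementTree as ET


def set_layer_entry_mode(root, layer_name, path, enabled):
    """Toggle mode="off" on an existing <add path=...> entry in a Layers.config tree.

    Returns the modified <add> element. Raises KeyError if the layer or entry is missing.
    """
    for layer in root.iter("layer"):
        if layer.get("name") != layer_name:
            continue
        for entry in layer.iter("add"):
            if entry.get("path") == path:
                if enabled:
                    entry.attrib.pop("mode", None)  # no mode attribute: loaded as usual
                else:
                    entry.set("mode", "off")  # mode="off" disables the entry
                return entry
        raise KeyError(f"no <add path={path!r}> in layer {layer_name!r}")
    raise KeyError(f"layer {layer_name!r} not found")
```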

User Experience for Sitecore editors

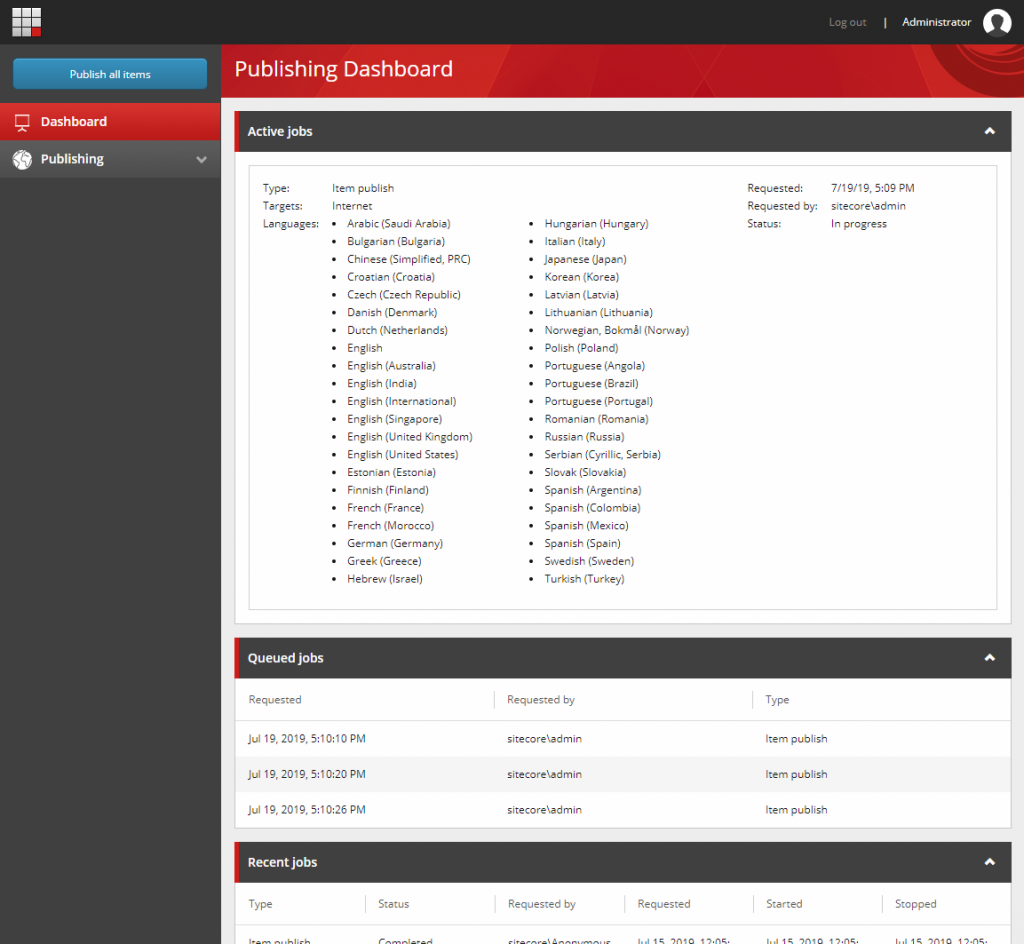

SPS comes with a new publishing UI for the Sitecore editors. The publish dialog looks a bit different and there is a new dashboard showing the status of all publish operations. A publish dashboard showing running publish processes and the publish queue is a good step forward.

Sitecore has a bad habit of building non-scalable UIs and providing unsorted language lists to authors. SPS is no exception. The list of languages in the publishing dialog is in random order and crammed into a small letterbox, while the checkboxes for target databases consume half the UI. I’ve written about this in a previous post, and it was mostly fixed in SPS 3.1.3 (Issue 500352).

The SPS publishing dashboard shows a list of queued publish jobs, a list of completed jobs, and the current job being published. However, SPS does not show any indication of the progress of the current job, as the built-in publisher does. There is no way for an author to know if the publish operation is running properly, nor are there any hints of how long it might take to finish. The sometimes long-running operation of moving the content from master to the target databases doesn’t even show anything in the logs unless debug logging is enabled.

In my opinion, SPS should show the number of processed items, similar to the built-in publisher. Making one counter with an ETA might not be easy, as SPS performs the publishing in multiple steps: building the manifest and then promoting the content to the targets in parallel. But I think it’s totally fine to have one or more counters with just incrementing numbers, as long as authors can see that it’s actually doing something. The built-in publisher counts published items in exactly this way.

One major drawback of the SPS UI is that it claims a publish operation is complete, when in reality it’s not. SPS marks a job as complete as soon as all the content is moved to the target databases. In reality, the publish operation is only half done at this stage. More details about this below.

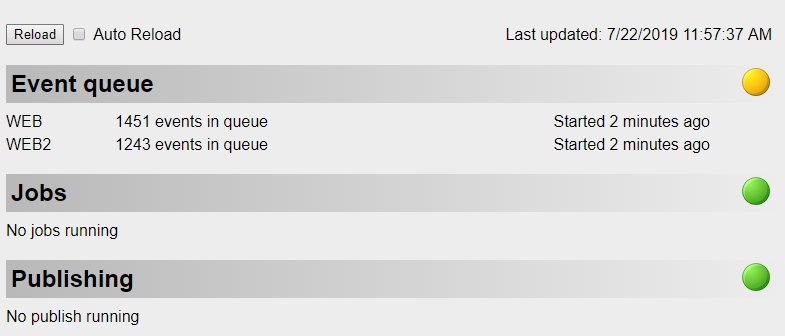

Under the hood

As part of writing the content to the target databases, SPS also creates a SavedItemRemoteEvent for every written item version, and these events are raised globally. This means all Sitecore servers receive a bunch of events that need to be processed, and they are processed sequentially in a single thread. They typically trigger cache clearing and search index updates. Exactly which operations are performed depends on what’s in the event pipelines. In practice, this means a publish operation is not complete until the event queue has been processed. Until then, Sitecore may show old cached content or give incorrect search results. This has generated a lot of support tickets from our authors claiming that publishing isn’t working.

To prevent the event queue from becoming extremely large, SPS has a threshold on how many events can be raised. By default this is 1,000 items, configured by the remoteEventCacheClearingThreshold parameter in the publishEndResultBatch pipeline. This means that if SPS publishes more than 1,000 items in one operation, it won’t raise an event for every written item version. Instead, a single separate event is raised that clears the entire cache and triggers a complete index rebuild. This can be pretty annoying for large sites that rely heavily on Content Search. The site I’ve been working on needs roughly 4-5 hours to rebuild its search indexes. In practice, this means editors won’t see their published changes until several hours after a complete publish. I haven’t found a perfect value for the cache clearing threshold parameter, but 10,000 worked quite well for me. On large publish operations, editors may need to wait half an hour or so after a “complete” publish (according to SPS) to see the changes on the site, since the servers may need to process thousands of events. But at least it avoids most index rebuilds, which would delay the index update a lot more.
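The threshold behaviour described above boils down to a simple decision. This is a conceptual Python sketch, not the code in the publishEndResultBatch pipeline: the RemoteEvent type, its "SavedItem" and "ClearAllCaches" kinds, and counting distinct items rather than versions are my assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RemoteEvent:
    kind: str  # hypothetical: "SavedItem" or "ClearAllCaches"
    item_id: Optional[str] = None
    language: Optional[str] = None
    version: Optional[int] = None


def plan_remote_events(written_versions, threshold=1000):
    """written_versions: list of (item_id, language, version) tuples written by one publish job."""
    distinct_items = {item_id for item_id, _, _ in written_versions}
    if len(distinct_items) > threshold:
        # Above the threshold: one global event, so every server clears all caches
        # and the search indexes are rebuilt from scratch.
        return [RemoteEvent("ClearAllCaches")]
    # At or below the threshold: one event per written item version.
    return [RemoteEvent("SavedItem", i, lang, v) for i, lang, v in written_versions]
```

Raising the threshold trades full cache clears and index rebuilds for a longer event queue, which is the balance behind the 10,000 value mentioned above.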

My colleague Mats built a nice status window that looks at the publish queue, the event queue and running jobs. With it, authors can roughly see when their finished publish jobs have actually been completed.

This architectural issue of raising item saved events becomes even worse when language fallback is enabled. SPS then raises events for every installed language, regardless of which versions are actually affected (issue 524299). In reality, SPS only needs to raise such events when 1) the item language version actually exists on the target item, 2) language fallback is configured for the target language, and 3) the item template is affected by fallback. This issue caused us huge pains until we got it sorted out properly. With 40+ configured languages, a site publish with a few thousand items easily generated over 100,000 events. It really kills the queue, to the extent that many events are deleted by the CleanupEventQueue agent before they are processed.
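The three conditions can be expressed as a filter. This is a minimal sketch of the rule I’d like SPS to apply, not how SPS works, assuming the language data is already available in memory; the function names and data shapes are hypothetical. For scale: 3,000 published item versions with 40 installed languages gives 3,000 × 40 = 120,000 events with the current behaviour.

```python
def naive_event_count(published_versions, installed_languages):
    """Current behaviour: one event per installed language for every published version."""
    return len(published_versions) * len(installed_languages)


def needed_events(published_versions, falls_back_to, target_languages, fallback_items):
    """Events needed under the three conditions above.

    published_versions: list of (item_id, language) pairs that SPS wrote.
    falls_back_to: {language: fallback source language}, e.g. {"en-GB": "en"}.
    target_languages: {item_id: set of languages that exist on the target item}.
    fallback_items: ids of items whose template is affected by language fallback.
    """
    events = set()
    for item_id, language in published_versions:
        events.add((item_id, language))  # the written version itself
        if item_id not in fallback_items:  # condition 3
            continue
        for target, source in falls_back_to.items():
            # condition 2: target falls back to the published language
            # condition 1: the target language version exists on the target item
            if source == language and target in target_languages.get(item_id, set()):
                events.add((item_id, target))
    return events
```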

As mentioned above, one of the event actions is to remove updated items from the cache. On large solutions, the caches may be quite large too. Removing single items from the cache involves looping through all the cache keys and removing the applicable ones. This can also be quite slow, since a long event queue means looping over all the cache keys over and over again. It is therefore recommended to set the Caching.CacheKeyIndexingEnabled.ItemCache setting to true on Content Delivery server roles. However, leave it turned off on Content Management servers, as the Sitecore config file states that “enabling this setting on content management servers with many editors, many languages, and/or many versions can degrade performance”. Leaving the setting turned off on CM servers is usually okay, since they typically don’t have many items from the web databases in their local cache.
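To illustrate why indexing the cache keys helps, here is a toy Python cache. It only shows the idea behind a setting like Caching.CacheKeyIndexingEnabled.ItemCache and is not Sitecore’s implementation; the "item_id|..." key format and the ItemCacheSketch class are hypothetical. Without an index, every removal scans every key; with an index, removal only touches the keys of that item.

```python
from collections import defaultdict


class ItemCacheSketch:
    """Toy cache whose keys start with "<item_id>|", e.g. "i42|en|1"."""

    def __init__(self, indexed):
        self.indexed = indexed
        self._entries = {}
        self._keys_by_item = defaultdict(set)  # used only when indexed

    def put(self, item_id, key, value):
        self._entries[key] = value
        if self.indexed:
            self._keys_by_item[item_id].add(key)

    def remove_item(self, item_id):
        """Remove all entries for item_id; return how many keys were inspected."""
        if self.indexed:
            keys = self._keys_by_item.pop(item_id, set())
            for key in keys:
                self._entries.pop(key, None)
            return len(keys)
        inspected = 0
        prefix = f"{item_id}|"
        for key in list(self._entries):  # full scan of every cache key
            inspected += 1
            if key.startswith(prefix):
                del self._entries[key]
        return inspected

    def __len__(self):
        return len(self._entries)
```

With thousands of queued events, the scanning variant repeats that full pass once per event, which is where the slowdown comes from.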

Editing content

Speaking of ItemSaved events, SPS adds event handlers to the authoring environment as well. As mentioned previously, their main purpose is to signal to SPS what has changed, so that SPS knows which items to consider when building the publishing manifest. The idea is great, but the implementation needs a lot of improvement and has a couple of issues.

As part of tracking changes, SPS also relies on the __Revision field. So when an author changes an unversioned field, the SPS ItemSaved event handler needs to update the revision guid on all versions in that language, and when a shared field is changed, all item versions in all languages need a new revision guid. That in turn triggers a chain of new ItemSaved events.
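The propagation rules can be sketched as a small function. It only illustrates which versions end up with a new __Revision value, and thus another ItemSaved event; the function name and the (language, version) tuples are hypothetical, not an SPS API.

```python
def versions_needing_new_revision(all_versions, edited, field_scope):
    """Return the (language, version) pairs that need a new __Revision value.

    all_versions: every (language, version) pair of one item.
    edited: the (language, version) pair the author saved.
    field_scope: "versioned", "unversioned" or "shared".
    """
    language = edited[0]
    if field_scope == "versioned":
        return [edited]  # only the edited version changed
    if field_scope == "unversioned":
        # unversioned fields are shared by all versions within one language
        return [v for v in all_versions if v[0] == language]
    if field_scope == "shared":
        # shared fields are shared by every version in every language
        return list(all_versions)
    raise ValueError(f"unknown field scope: {field_scope!r}")
```

With 40 languages and three versions each, a single shared field change touches 120 versions, and each of those saves raises its own ItemSaved event.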

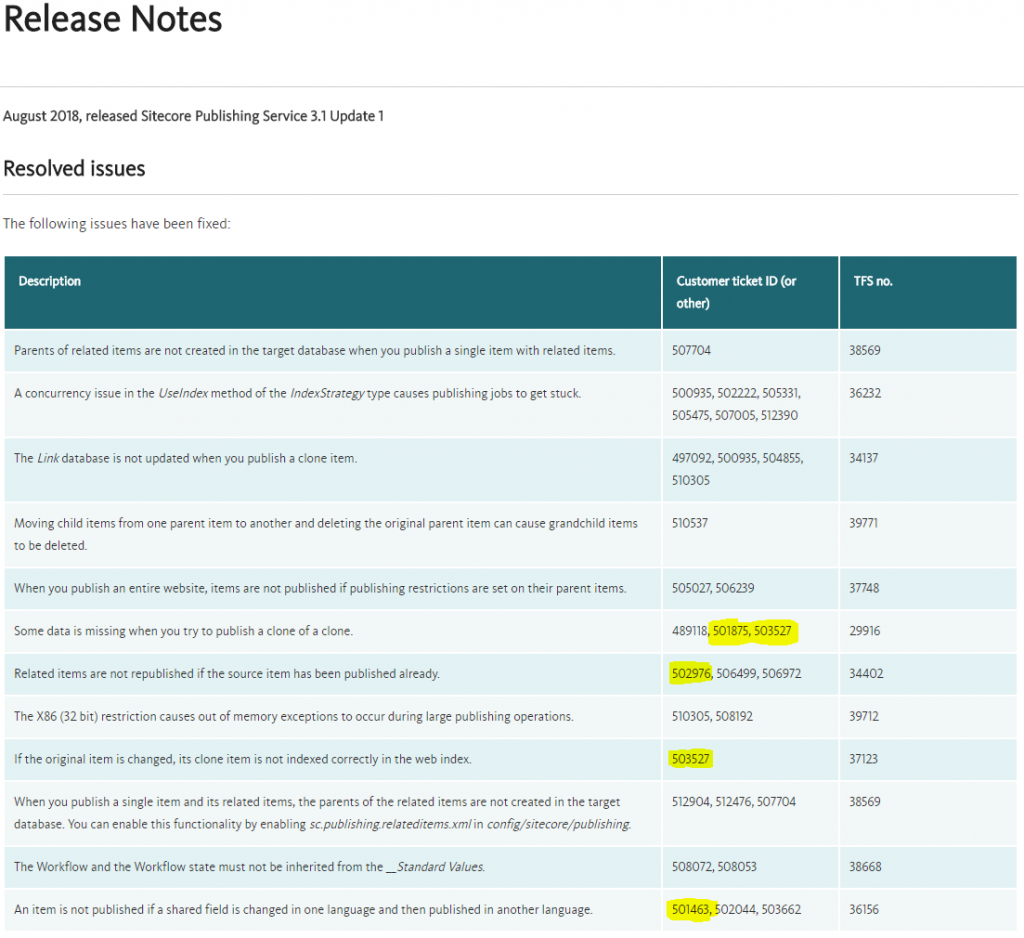

SPS had some issues with actually updating the correct revisions (issue 501463, partly fixed in 3.1.1), depending on which field types you’ve changed. Most of this is fixed now, but at the time of writing, it still doesn’t deal with language fallback properly. The chain of ItemSaved events also has a great performance impact on the authoring environment when items have multiple versions. This can be addressed by updating the revision fields in silent mode and manually signaling SPS about the updates. See my previous blog post “Improving Editing Performance when using Sitecore Publish Service” for details.

Tip: I once found a few items in the master database with an incorrectly formatted guid in the __Revision field. The revision field must be a lower case guid with dashes and without braces. Other formats will cause SPS to crash. I have no idea how those incorrectly formatted guids got there in the first place, but once found, it was an easy fix to just replace them.
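A minimal Python sketch for checking and normalizing revision values, for example on values exported from the master database. It only encodes the format rule stated above; how you read and write the field values is up to you, and the function names are hypothetical.

```python
import re
import uuid

# Lower case guid with dashes and without braces, e.g. "abcdef01-2345-6789-abcd-ef0123456789".
REVISION_PATTERN = re.compile(r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}")


def is_valid_revision(value):
    return REVISION_PATTERN.fullmatch(value) is not None


def normalize_revision(value):
    # uuid.UUID accepts braces, upper case and missing dashes;
    # str() always returns the lower case, dashed form. Raises ValueError for non-guids.
    return str(uuid.UUID(value.strip()))
```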

In my opinion, SPS should only get signals about which items have actually changed. The message to SPS could be a bit more verbose, including a list of changed fields etc. SPS could then have a background process that resolves which item versions need publishing. This would also enable a more logical implementation of “publish related items”. The current implementation has led to a lot of complaints in the community about SPS not publishing the expected related items, though I haven’t had many issues with this myself.

Tip: Enabling the sc.publishing.relateditems.xml config file in SPS could help you get a more predictable publish result.

Another common source of frustration among authors is incremental publish, also referred to as “site publish”. Its purpose is of course to publish everything that has changed since the last publish. However, SPS has a bad habit of losing some of the CM-to-SPS notifications I described above. According to some Sitecore staff I’ve talked to, this seems to be a known problem. For us it happens on a daily basis, though I haven’t found a solid way to reproduce it. With the SPS module, Sitecore introduced the Polly library. This is a great framework for handling retry policies, circuit breakers, bulkhead isolation, timeouts etc. Perhaps incorrect or incomplete usage of this library could be the cause of the lost notifications? Just a guess, as I haven’t dug into the code, but it may be worth a deeper look.
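For readers unfamiliar with retry policies, here is the basic pattern in Python. This is neither Polly (a .NET library) nor the code SPS uses; send_with_retry is a hypothetical helper showing retry with exponential backoff for transient failures. Retrying alone does not help if the sending process dies before the notification is sent.

```python
import time


def send_with_retry(send, payload, attempts=5, base_delay=0.5,
                    retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call send(payload), retrying transient failures with exponential backoff.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between tries and
    re-raises the last error once `attempts` tries have failed.
    """
    for attempt in range(attempts):
        try:
            return send(payload)
        except retry_on:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
```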

Update: We’ve also experienced a problem where items “randomly” disappear from the web database, but we were unable to reproduce it. The problem kept coming back, and we couldn’t find anything about it in the logs. It got to a stage where we added a bunch of watches on the web database that fired an alarm if a monitored item went missing. It fired, we started looking into all the details, and we finally found a small trace: the Publishing_ManifestStep table contained DeletedItem events. It turned out that if a publishable clone points to a source item that isn’t in a publishable workflow state, the clone is unpublished during a “Site publish” operation, while it’s handled correctly during an “Item publish” operation. So if anything changes in the master database that causes SPS to consider the item for publishing, the item is deleted from web if its source is not publishable. Publishing the item again using “Item publish” re-publishes it. Sitecore has now created a fix for this.

Other issues and updates

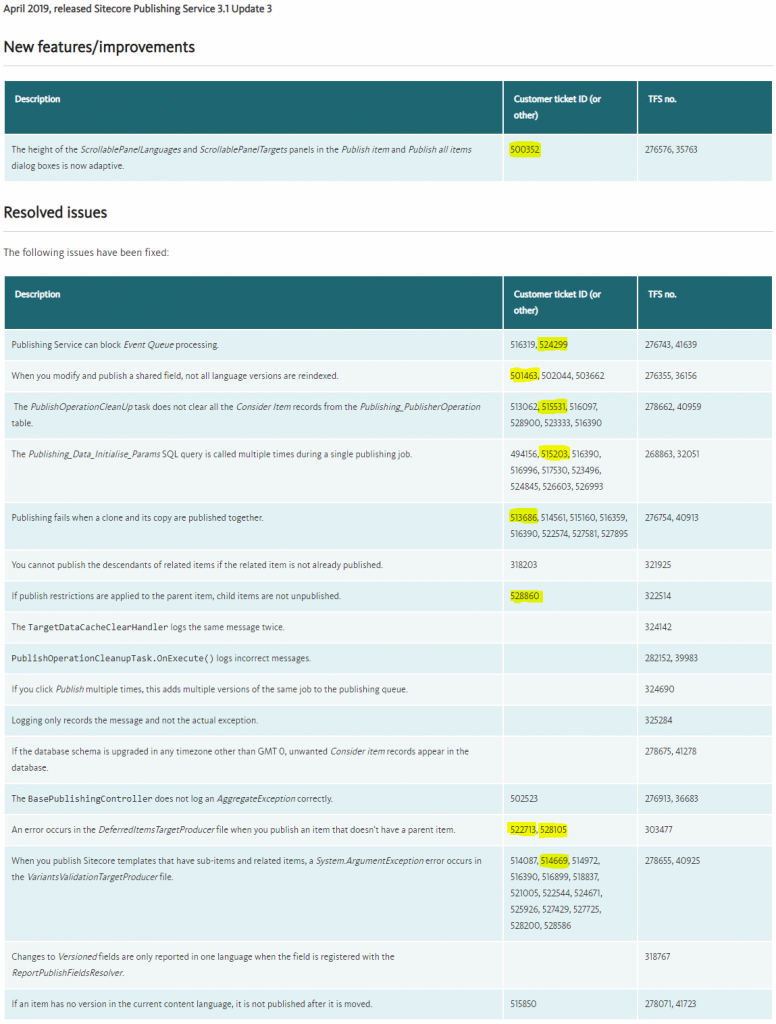

While working on this project, Sitecore released a few SPS updates, including many fixes for tickets I filed. It sometimes feels like I’ve filed most of them, but I’ve only filed the ones highlighted in the release notes:

Below is an unsorted list of some issues I’ve had during this project. If you run into similar problems, it could be worth filing a ticket.

- Solr Search indexes may not be properly updated after publish when a shared or unversioned field is edited, or when a field involving language fallback needs index update (Issue 501463)

- If an item is moved in the master database between two publish operations, SPS may incorrectly remove valid item versions from the web database. (Issue 498362)

- A published clone item may be incorrectly unpublished, if the clone’s source item’s first version isn’t publishable (Issue 520696)

- Clones of clones may not be published or cause SPS to crash (Issue 501807, 501875)

- Some content of cloned items isn’t published or indexed properly when language fallback is used. (Issue 503527)

- Related items are not published correctly. (Issue 502976)

- SPS crashes with a “Duplicate key exception” when promoting clones or when performing variant validation. (Issue 513686, 514669)

- SPS may deadlock or go into an infinite restart loop (Issue 515203, 522713)

- SPS adds “Consider items” notifications to its database with a far-future date (DateTime.MaxValue), causing changed items not to be included in a site publish and also filling the database with records that’ll never be removed. (Issue 515531)

- If a field change only involves letter case, such as changing “foo” to “Foo”, the change won’t be published. At the time of writing, and as far as I’m aware, all current versions of SPS need a patch for this. See Sitecore.Support.290996 (Issue 524599)

- When language fallback is enabled, far too many item save events are created, as one event is created for every language available in the system, regardless of whether the versions exist or the event is actually needed. (Issue 524299)

- SPS may crash with an InvalidOperationException error, caused by conflicting patches for previous issues. (Issue 528105)

- When pages are unpublished, descendant items are neither unpublished nor removed from indexes. (Issue 528860)

- When an author removes a language from a published item, SPS may create surplus records in Solr web index after publish, representing item versions that have never existed. (Issue 531371)

- Site publish incorrectly deletes clone items from the web database if their source item is not in a publishable workflow step. (Issue 540585)
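I don’t know how SPS compares field values internally, but the case-only issue (524599) has exactly the symptom a case-insensitive comparison would produce. This hypothetical Python sketch contrasts such a check with an exact (ordinal) one; both function names are made up for illustration.

```python
def changed_fields_case_insensitive(old, new):
    """Hypothetical buggy check: treats "foo" -> "Foo" as unchanged."""
    return {name for name, value in new.items()
            if old.get(name, "").casefold() != value.casefold()}


def changed_fields_ordinal(old, new):
    """Exact (ordinal) check: any difference, including letter case, is a change."""
    return {name for name, value in new.items() if old.get(name) != value}
```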

What Sitecore should fix in SPS

There are many things Sitecore should fix in order to make SPS a realistic replacement for the built-in publisher. I’ve compiled an unsorted list of things I think are reasonable for Sitecore to solve in the near future. Most of them shouldn’t really be too hard to do.

- The UI should have a clear progress indication, so that a content editor can see if a publish operation is actually being performed or if it has just hung.

- The UI should show more information about a running job. Today the user gets more details from queued jobs and finished jobs, than the one that is actually running.

- The logging level should be aligned between building the manifest and promoting the items. Today one has to enable debug logging in order to distinguish between a running item promotion and a hung process.

- The publish dialog needs to be improved. Even though the most severe issues are fixed in 3.1.3, there are still many things that need improvement, such as the ability to pre-select or lock publish targets.

- Language lists should be sorted (consistently throughout the platform). As of 3.1.3 the list is at least sorted, but horizontally, while the UI is displayed in columns.

- Double-clicking a publish job shouldn’t submit multiple jobs (fixed in 3.1.3)

- Editors should be able to cancel queued jobs.

- If a user leaves the SPS dashboard open for a few hours, the browser crashes.

- The database query executed when viewing recent jobs is really heavy. It does a huge database join that usually causes the query to time out. With the current implementation, an author can easily, and unintentionally, perform a Denial of Service (DoS) operation on the CM/DB.

- Logging needs to be improved a lot. When something goes wrong, there’s typically no indication of what caused the error. A duplicate key exception with just a manifest id isn’t of much help.

- When a job fails, it just starts over and over again in an infinite(?) loop. If the job at the front of the queue keeps failing several times, it should be discarded.

- The signals between CM and SPS sometimes fail. When SPS misses save events, it doesn’t know what to publish. This must be made much more robust so that authors can rely on “Site publish”.

- Determining which items need to be published should be done in a more solid and efficient way. Writing new revision field values is not sustainable in the long run.

- Finding out what was published or unpublished is tricky. It would make sense to get such a report in the SPS UI.

- Consider open sourcing SPS, and other microservices, or at least opening them up to a selected user group. Code written in .NET Core is basically unreadable when decompiling the DLLs. This makes it virtually impossible for partners to pin down issues. Instead, much more time is spent on patch management on the partner/client side, and probably also on the Sitecore support side, as less accurate issue data can be provided when filing a ticket.

- Even though cache clearing and index updates aren’t part of SPS itself, they are part of the publish user experience. This has led to tons of issues, both from an accuracy perspective and a user experience perspective. It just annoys editors when SPS claims a publish is complete while the CD servers are processing cache clear messages on the event queue for an hour or so. Consider bundling several “item saved” messages into single events to improve both indexing and cache clearing performance.

- Also not part of SPS itself: other parts of Sitecore, such as EXM, publish individual items. This may be efficient for the legacy publisher, but not for SPS. I’ve experienced several problems, such as timeouts in EXM, where there are simply too many jobs added to the queue.
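The suggestion to discard a job that keeps failing is a classic dead-letter queue. This is a minimal Python sketch of the idea, not SPS’s job queue: process_queue and the max_attempts limit are hypothetical, and jobs are assumed to be hashable identifiers.

```python
from collections import deque


def process_queue(jobs, run, max_attempts=3):
    """Run jobs in order; a job that fails max_attempts times is moved to a dead-letter list.

    Returns (completed, dead_letter) so the discarded jobs can be reported to editors.
    """
    queue = deque(jobs)
    attempts = {}
    completed, dead_letter = [], []
    while queue:
        job = queue[0]
        try:
            run(job)
        except Exception:
            attempts[job] = attempts.get(job, 0) + 1
            if attempts[job] >= max_attempts:
                dead_letter.append(queue.popleft())  # stop blocking the queue
            continue
        completed.append(queue.popleft())
    return completed, dead_letter
```

Moving the failing job aside lets the rest of the queue proceed, instead of the whole queue stalling behind one broken job.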

Above are probably the easy fixes. To make SPS more versatile, I’d also suggest making it more “modular”, so that content processing can be done during publishing. It makes sense to do heavy and/or repeated computations during publishing, so that the published data is as close to the end output as possible. Looking at a regular web page, most of it is basically “static” data, i.e. the output is virtually the same for every request. It’s quite common that we have a lot of if-statements etc. in our code that just check if content is entered, if links are valid, if images exist and so on. If we can do some of the processing at publish time, we can avoid a lot of repetitive CPU work. Clones are a good example of this. When a clone is published, it is “uncloned” so that the source values are written to the clone. Thereby all the clone computation doesn’t have to be done on the CD servers. We could do much more work like this at publish time, such as dealing with language fallback and standard values, rewriting links etc. I’ve seen a few solutions that didn’t use SPS because they required this kind of publish processing. This can be done quite easily with the built-in publisher. See my post on flattening the Sitecore web database as one example.
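As a small illustration of publish-time flattening, this Python sketch resolves a clone’s field values once, so the delivery side never has to look at the source item. It assumes a clone stores None for fields it doesn’t override; real Sitecore clone resolution (standard values, language fallback, clone notifications) is more involved, and flatten_clone is a hypothetical helper.

```python
def flatten_clone(clone_fields, source_fields):
    """Return the field values to write for a clone in the target database.

    clone_fields: the clone's own values; None means "not overridden, inherit from source".
    source_fields: the source item's values.
    """
    flattened = dict(source_fields)  # start from the source values
    for name, value in clone_fields.items():
        if value is not None:
            flattened[name] = value  # the clone's own value wins
    return flattened
```

The same pattern, computing the final value once while publishing, would apply to the other cases mentioned above, such as standard values or rewritten links.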

When to use SPS

As seen above, SPS is in my opinion very much an experimental product, not yet ready for production. It had close to ten releases when I jumped on the train, so I thought it would be somewhat stable by then, but I was very wrong.

If you have a large solution, where the built-in publisher just won’t work anymore, it might be worth giving SPS a try. This was the sole reason why I did.

If the built-in publisher is frustratingly slow, such as taking a few hours for a full publish, and your site doesn’t use clones or language fallback, uses limited caches, and doesn’t rely too heavily on accurate content search indexes, SPS might also be worth a try.

I’d recommend that everyone else stay far away from SPS, given its current state at the time of writing.

What’s upcoming in future SPS versions

During SUGCON in London, April 4-5, Sitecore presented what’s upcoming in future versions of SPS. One challenge Sitecore has with current SPS versions is that there is separate code for “item publish” and “incremental publish”. The goal is to merge these into one code base. To do this, future SPS versions will be able to publish a set of items, or item branches. An incremental publish then becomes a set of single item publishes, so the same code can be used to perform both. This will probably be a good leap forward, as it should reduce the complexity of the product.

Sitecore is also working on making SPS redundant, in a way where several SPS nodes decide which one should do the job while the others stand by. Cool feature, but I really can’t understand why Sitecore is prioritizing it over application stability, accuracy and a better user experience. A job failing due to a logic error would cause all SPS nodes to fail anyway, regardless of how many you have. My list of suggested improvements above has been shared with Sitecore, so I hope those get into the product very soon.

Really interesting read Mikael. We are following in your footsteps with a large installation, I will definitely be keeping an eye on those events!

Nice post Mikael, thanks.


This is a really great post and I thank you for sharing. I see from one of your screenshots that you have 2 publishing targets “web” and “web2”. I’m using SPS and have a couple of questions regarding the second target.

1) Does the SPS dashboard show the second target (“web2”) as “Failed” for your solution? My SPS logs show that the publish operation succeeds to both targets, but the UI claims the second target “Failed” for no apparent reason. There are no errors anywhere, even with “Development” mode enabled in SPS. The publish definitely succeeds, as updated content is seen on the second target CD.

2) Your CM obviously refers to the second target as “web2” but does your CD attached to “web2” refer to that database as “web2” or “web”? I ask because I did a lot of work to rename “web” to “webus” (our “web2”) on our second CD. It works for the most part but we had errors to do with personalization and found there were some hard coded “web” strings in the Sitecore code which made me despair and go back to calling it “web”. However, in doing so I seem to have some issues with processing remote events and I wonder if it’s because my CM calls it “web2” but the CD knows it as “web”. Can you comment on this at all?

Thanks again.

P.S. I’m running SC 8.2 u2

Thank you!

Well spotted that I use two targets 🙂 I’ve considered writing a separate post on this, describing its benefits and how I made it work. Your question gives me a good reason to write that post.

Publishing to both targets shows “Success”, but I recall having the same error on an 8.2u5 solution once. I don’t remember exactly what caused it, but I think it was a small config mistake.

On the “web2” server, I have configured it to use a “web2” database, and I’m also keeping a copy to the same database with the “web” name, since there are hard coded references to web as you’ve discovered too. The main reason to use “web2” on that CD is that my CM is taking care of the Solr indexing and it puts the database name into the index as a hard filter.

Thanks – that’s really interesting as I was considering adding a dummy “web” database to account for the hard coded refs as you describe. When you say “copy” – do you mean it’s just a config definition pointing to the same database, or an actual physically separate db (replicated or empty)?

I hadn’t considered the index issue as we use separate SOLR Cloud clusters for CM and CD1 (web) and CD2 (web2) but that’s a very good point to consider.

I made a copy of the config/connection string, both pointing to the same database.


Really interesting and helpful post Mikael. I was looking for something exactly like this. Thanks!
